Week #4: Self-driving cars

28 Jan 2018

Paper: End-to-end Learning of Driving Models from Large-scale Video Datasets, by Huazhe Xu et al. (arXiv, 2016) [pdf].

I was sitting in class last week and my professor mentioned the above paper with special affection. Given how often self-driving cars have been in the news lately, I figured this might be a good paper to get a feel for how these things work.

When I hear “self-driving cars,” I don’t know about you, but I immediately think about A.I. and neural networks. But I was pretty surprised to learn that neural networks are not that involved in deciding how autonomous cars move around.

Instead, neural networks are more commonly used to detect assistive information: things like where the other cars are, where the center of the lane is, etc. But even in this arena, they’re not that common; more traditional engineering approaches are the norm.

Self-driving car ingredients

To make a self-driving car, there are two key components: First, you need actuators, which translate the signals from the computer into physical control of your steering wheel, and the gas and brake pedals. (Here’s a terrifying idea: $1500 is all it takes to turn your normal car into a mostly-self-driving car.)

Second, you need a bunch of sensors. I mean, a lot of sensors. Uber’s self-driving Volvos, for example, have:

- 20 cameras

- 4 or so GPS devices

- an inertial measurement unit, or IMU (tells you the relative orientation of the car itself)

- sonar (good for detecting cars at close range)

- radar (used for long-range car detection, but easily fooled by tin cans)

- lidar (LIght + raDAR, used for measuring depth; gif)

If you’ve ever seen a self-driving car, you might have noticed a giant spinning thing on the top of it—that’s a lidar device. Apparently Uber engineers refer to it as “the chicken bucket,” because it looks like a giant, spinning, futuristic bucket of KFC chicken.

Roombas and the Robocar Grand Prix

Next, the data captured by all the sensors is likely processed by a suite of algorithms referred to broadly as SLAM, which stands for simultaneous localization and mapping. The problem SLAM solves is simple enough to describe: As your robot navigates the world, it has no idea about its environment or where it is in that environment. So SLAM combines information from all the robot’s sensors to build up a map of its surroundings while simultaneously working out where it is on that map.

Everyone uses SLAM. Even the new Roomba vacuums use SLAM. But the first real success of SLAM came in 2005, when a car named Stanley used it to win the DARPA Grand Challenge.

The DARPA Grand Challenge was a competition set up to sponsor the development of autonomous vehicles. You can think of it as a sort of Robocar Grand Prix. To win the $2 million prize, your autonomous vehicle had to navigate a desert course more than 100 miles long, totally unassisted. In 2004, the first year of the challenge, not a single team completed the race; the best car made it only about 7 miles. The very next year, not only did five teams complete the race, but 22 of the 23 competitors got further than any car had the previous year. That’s how fast this technology came to fruition.

Driving is easy

From one perspective, the task of making a self-driving car sounds insanely difficult: The car must avoid hitting other cars and pedestrians, stay in its lane, obey the laws, and handle a few ethical dilemmas—all of this while navigating a passenger quickly to their destination.

But from another perspective, an autonomous car’s job is very simple: All it has to do is decide how and when to turn the steering wheel, and how and when to press the brake and accelerator. Put it that way, and it sounds a whole lot less complicated than, say, becoming a professional ballerina.

This simpler perspective is where neural networks can come in. Current self-driving cars convert sensor data into driving instructions using a very hands-on, human-engineered approach. Neural networks, on the other hand, offer the possibility of developing autonomous cars in an “end-to-end” fashion. This is the idea that, provided we give our car enough sensors, the system should be able to learn all on its own the relationship between what it sees and how it should turn the wheel and press the brakes and gas. We don’t have to teach it explicitly about lanes or other cars or any of that. Sounds easy, right?

Neural networks for driving

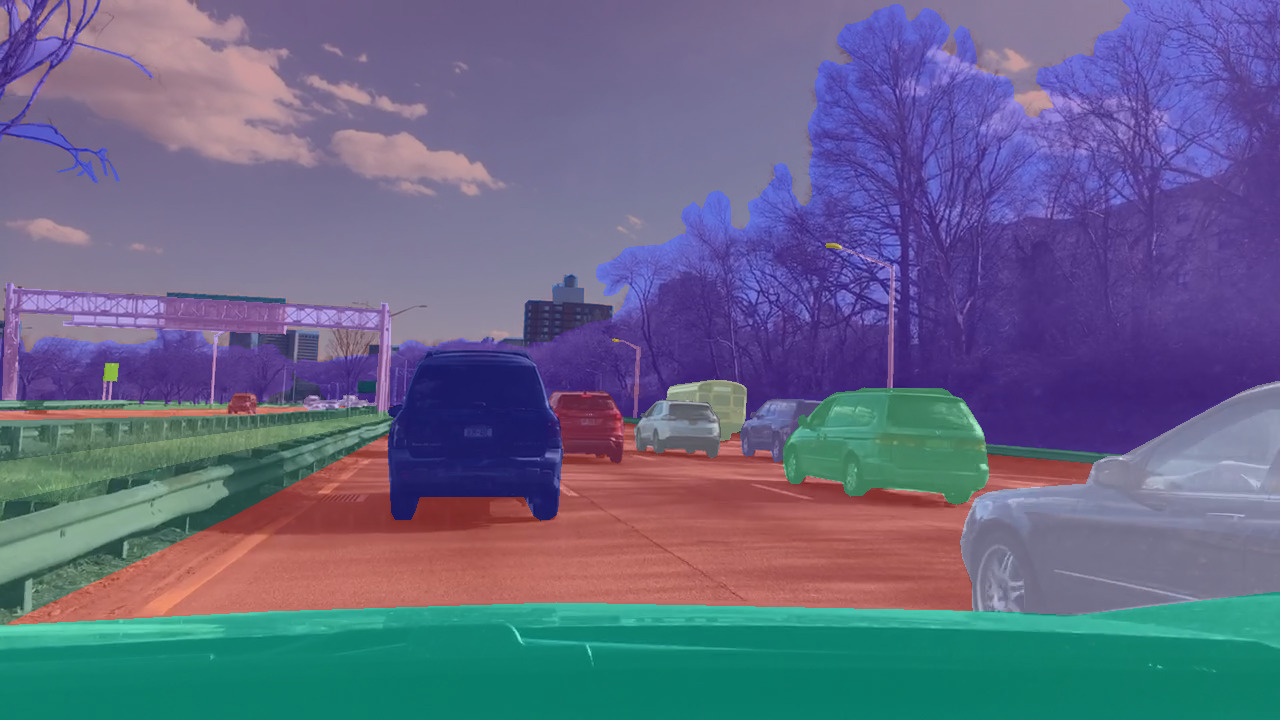

In this paper, the authors develop a neural network model that, at each point in time, translates an image from a video feed into a suitable action. The only actions the authors consider here are “straight”, “stop”, “left turn”, and “right turn”. Clearly we’re leaving out some important aspects of driving—say, “accelerate”—but the task turns out to be difficult enough even in this simplified setting.

You can think of their model as a function, called f. The function is trained to map an image, x, to the action, a, it believes is most reasonable: a = f(x).

If you’re not familiar with neural networks, when I say “function” I really do mean something like the f(x) = mx+b stuff you learned in school. Only here, instead of x being a single number, x is an image, which is represented as a list of numbers corresponding to the colors of every single pixel in the image. And instead of f being a linear function (as in mx+b), here we have a bunch of functions like this (see equation 4) combined together. Finally, computer scientists like catchy names, so instead of saying “fitting a curve to a bunch of data points” they call it “training.” And voilà, the A.I. apocalypse is upon us!
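To make the "bunch of mx+b functions combined together" idea concrete, here is a minimal sketch in plain Python. To be clear, this is not the authors' model: the layer sizes, the random (untrained) weights, the tiny fake "image", and every function name below are my own assumptions for illustration. It only shows the shape of a = f(x): numbers in, one of four actions out.

```python
import random

ACTIONS = ["straight", "stop", "left turn", "right turn"]

def linear(weights, bias, x):
    """One 'mx + b' per output: each row of weights plays the role of m."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def relu(v):
    """A simple nonlinearity, so stacked layers are more than one big mx + b."""
    return [max(0.0, vi) for vi in v]

def make_layer(n_in, n_out, rng):
    """Hypothetical helper: random weights standing in for trained ones."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias

def f(x, layers):
    """Map a flattened image x (a list of numbers) to one of the four actions."""
    h = x
    for i, (weights, bias) in enumerate(layers):
        h = linear(weights, bias, h)
        if i < len(layers) - 1:  # no nonlinearity after the last layer
            h = relu(h)
    best = max(range(len(ACTIONS)), key=lambda k: h[k])
    return ACTIONS[best]

rng = random.Random(0)
image = [rng.random() for _ in range(12)]  # made-up 2x2 RGB image, flattened
layers = [make_layer(12, 8, rng), make_layer(8, 4, rng)]
a = f(image, layers)
print(a)
```

Because the weights are random, the action it prints is meaningless. "Training" is the process of nudging those weights until the outputs start matching what human drivers actually did.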

In order to train these networks, you need a lot of training data. So the authors introduce a data set they curated called the Berkeley DeepDrive Video dataset (BDDV), which comprises roughly 10,000 hours of dashcam video footage of cars driving in different cities, during different weather conditions, and so on. The largest data set before this one consisted of only 214 hours of driving, so this is a huge improvement.

The data set also includes additional measurements that can be used to figure out what the drivers in the videos did at each moment in time. So with this, when we give an image to the network, we can compare the network’s suggested action to what the human in the video did in the same situation.
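That comparison boils down to counting how often the network and the human agree. Here is a small sketch of the bookkeeping; the function name and the two lists of labels are invented for illustration and are not taken from the dataset.

```python
def accuracy(predicted, human):
    """Fraction of frames where the predicted action matches the human's."""
    if len(predicted) != len(human):
        raise ValueError("need one prediction per human action")
    if not human:
        raise ValueError("need at least one frame")
    matches = sum(p == h for p, h in zip(predicted, human))
    return matches / len(human)

# Made-up labels for five frames of video (not real data).
human_actions = ["straight", "straight", "stop", "left turn", "straight"]
network_actions = ["straight", "straight", "stop", "right turn", "straight"]

score = accuracy(network_actions, human_actions)
print(score)  # 4 of 5 frames agree, so this prints 0.8
```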

Remember, the model only gets the video feed; it has absolutely no idea where the drivers in the videos are headed. It turns out that, despite this complete ignorance of where it’s going, its predictions about when to turn, stop, or keep going are still 84% accurate. However, this isn’t as impressive as it might sound. You can get 80% accuracy knowing only the car’s speed (i.e., no neural network needed), presumably because cars only turn when they’re going slowly. Given that, the fact that their network only adds an extra 4% of accuracy doesn’t seem like much of an accomplishment.
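To get a feel for why speed alone goes such a long way, here is one guess at what a speed-only rule could look like. This is purely hypothetical: the thresholds, the units, and the function name are mine, it is not the baseline from the paper, and it makes no attempt to reproduce the 80% figure.

```python
def speed_only_policy(speed_mps):
    """Guess an action from speed alone (hypothetical thresholds, in m/s)."""
    if speed_mps < 0.5:
        return "stop"       # barely moving: probably stopped or stopping
    if speed_mps < 5.0:
        # Slow but moving: probably turning. Speed can't tell us which way,
        # so this rule always guesses the same direction.
        return "left turn"
    return "straight"       # moving fast: almost certainly going straight

for speed in (0.0, 3.0, 20.0):
    print(speed, speed_only_policy(speed))
```

Notice what this rule can never get right: it has no way to tell a left turn from a right turn. Whatever the network is adding on top of speed, it has to come from things like that, which only the image can reveal.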

Clearly, the use of neural networks in self-driving cars is very much in its infancy (see here and here for a review). So I’m going to chalk up my professor’s fondness for this paper to its way of framing the problem: If we could really teach robo-cars how to drive solely by video instruction, that would be a whole lot easier than what we currently do. But we clearly have a very long way to go before that is possible.

Summary

- Topic: Translating images from a dashcam into driving instructions like “turn”, “stop”, etc.

- Why we care: Current autonomous vehicles incorporate rule-based driving policies, whereas learning-based policies might be better suited for handling complex or rare scenarios

- Conclusion: The authors use an extremely large data set of dashcam videos depicting cars driving in a variety of different conditions, along with a novel neural network model, to learn a mapping between images and driving instructions

Related links

- SLAM tutorial: link

- Evil cars in movies: link

- Team notes from the 2007 DARPA Urban Challenge: pdf

- There is a driving simulator called TORCS that is used in a lot of research on autonomous cars. Wikipedia tells me that “In December 2000 CNN placed TORCS among the ‘Top 10 Linux games for the holidays’.” What a selling point!